

A progressive web app is being developed to help people with diabetes look up information about the food they eat. Initially, the database will contain only a small number of food items.

Axios, Fuse, Icons8, Pluralize, and Vue are some of the open-source projects used to create the app. Netlify hosts the whole thing.

There are three components at work here:

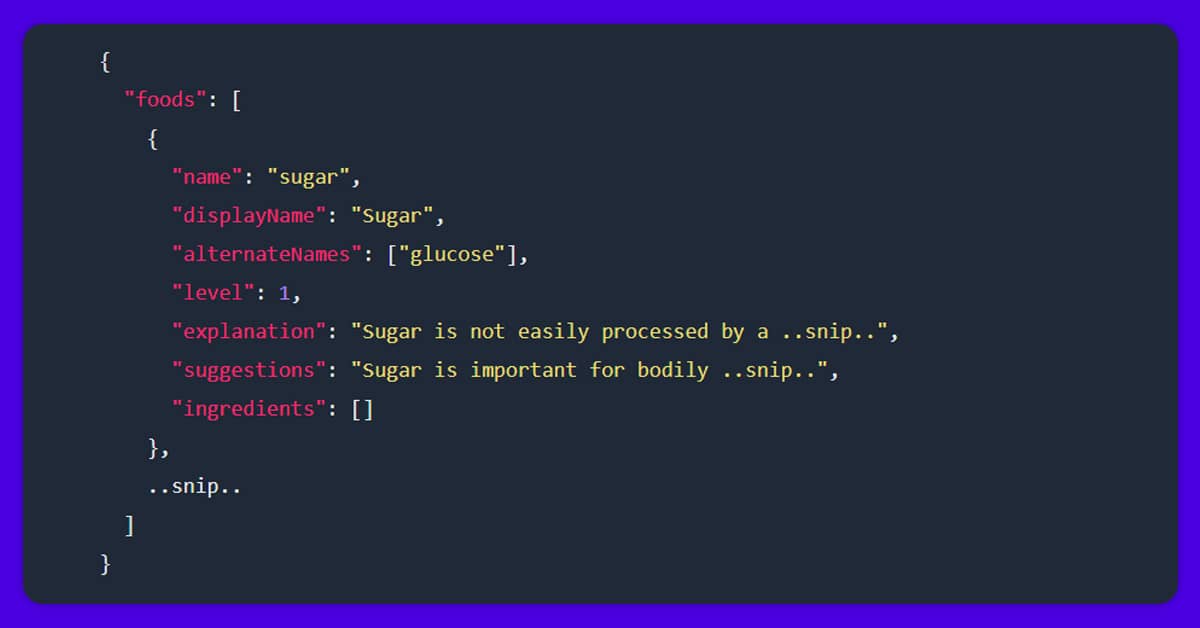

You can start by writing a strictly formatted ‘database’ in JSON. It will look like this:
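As a minimal sketch, the entries might be shaped as follows. The field names (`name`, `alternateNames`, `displayName`, `level`, `explanation`, `suggestions`) are the ones referenced elsewhere in this article; the sample values and the `isValidFood` helper are hypothetical and only illustrate what "strictly formatted" could mean in practice.

```javascript
// Hypothetical sample entries. Field names come from the article;
// all values are placeholders for illustration only.
const foods = [
  {
    name: 'french fry',            // singular, used for search matching
    alternateNames: ['chip'],      // other singular names to match on
    displayName: 'French Fries',   // label shown on the food item card
    level: 1,                      // 1 (Avoid) to 5 (Great); placeholder value
    explanation: 'Placeholder explanation.',
    suggestions: 'Placeholder suggestions.',
  },
  {
    name: 'example food',
    alternateNames: [],
    displayName: 'Example Food',
    level: 3,                      // placeholder value
    explanation: 'Placeholder explanation.',
    suggestions: 'Placeholder suggestions.',
  },
];

// Hypothetical helper: checks that one entry follows the strict format.
function isValidFood(food) {
  return (
    typeof food.name === 'string' &&
    Array.isArray(food.alternateNames) &&
    food.alternateNames.every((n) => typeof n === 'string') &&
    typeof food.displayName === 'string' &&
    Number.isInteger(food.level) &&
    food.level >= 1 &&
    food.level <= 5 &&
    typeof food.explanation === 'string' &&
    typeof food.suggestions === 'string'
  );
}

console.log(foods.every(isValidFood));
```

Keeping every `name` singular matters later, because the search query is singularized before it is matched against the data.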

Once you have the data structure, you can start building the API. Thanks to Pluralize and Fuse, the search is rather straightforward.

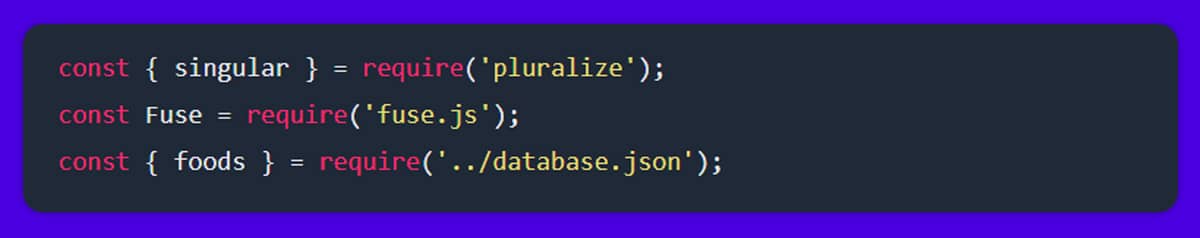

You can start by importing the dependencies…

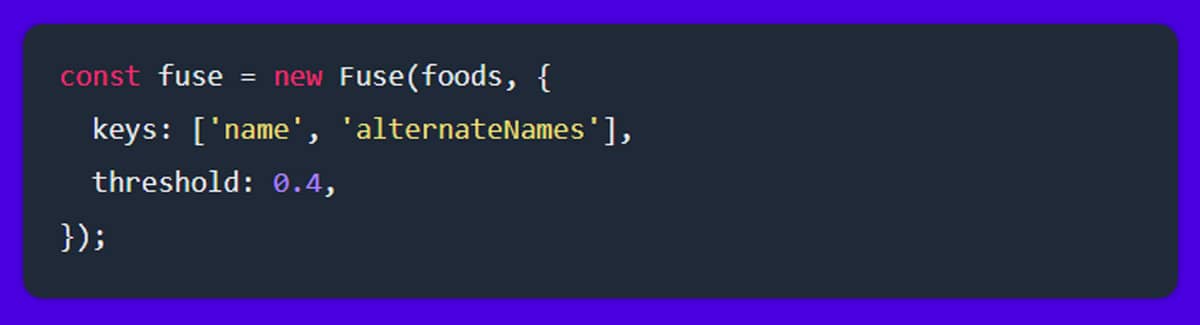

In addition to Pluralize and Fuse, the imports also include the data itself. After that, you can build a search engine on top of the data.

When users search, Fuse checks for partial and exact matches in the name and alternateNames keys. Now it’s time to get down to business.

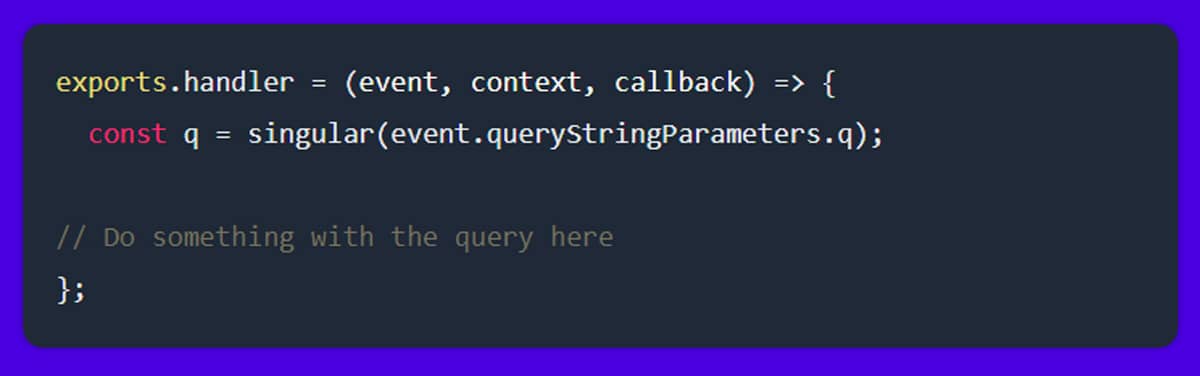

Inside the main function, we extract the user’s query and singularize it. Pluralize’s singular() method changes “french fries” to “french fry,” which has a greater probability of matching a name in the database because those are all singular.

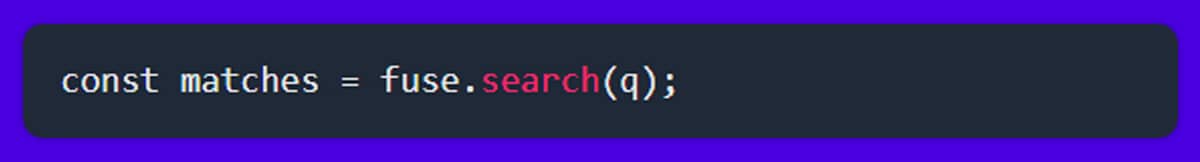

After that, we search with Fuse.

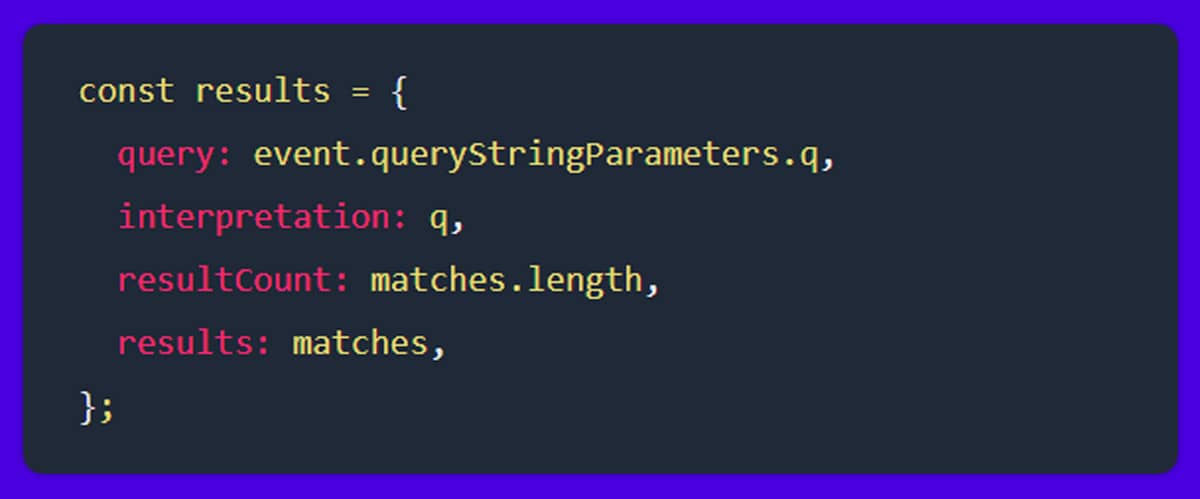

Then, you can prepare the results:

When the API delivers results, it’s helpful to include some metadata, such as how the API processed the query (“french fry” vs. “french fries”) and how many results were retrieved. Now that the results object is available, we can finally respond.
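A results object with that kind of metadata could be sketched like this. The helper name `buildResults` and the property names (`query.original`, `query.processed`, `count`, `items`) are assumptions made for illustration, not the original API's actual shape.

```javascript
// Hypothetical helper: wraps the matches with metadata about the query.
function buildResults(originalQuery, processedQuery, matches) {
  return {
    query: {
      original: originalQuery,   // what the user typed
      processed: processedQuery, // what was actually searched for
    },
    count: matches.length,       // how many results were retrieved
    items: matches,
  };
}

// Example with a single placeholder match.
const sampleResults = buildResults('french fries', 'french fry', [
  { name: 'french fry', displayName: 'French Fries' },
]);

console.log(sampleResults.query.processed, sampleResults.count);
```

Returning both the original and the processed query lets the client show the user what was actually searched for.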

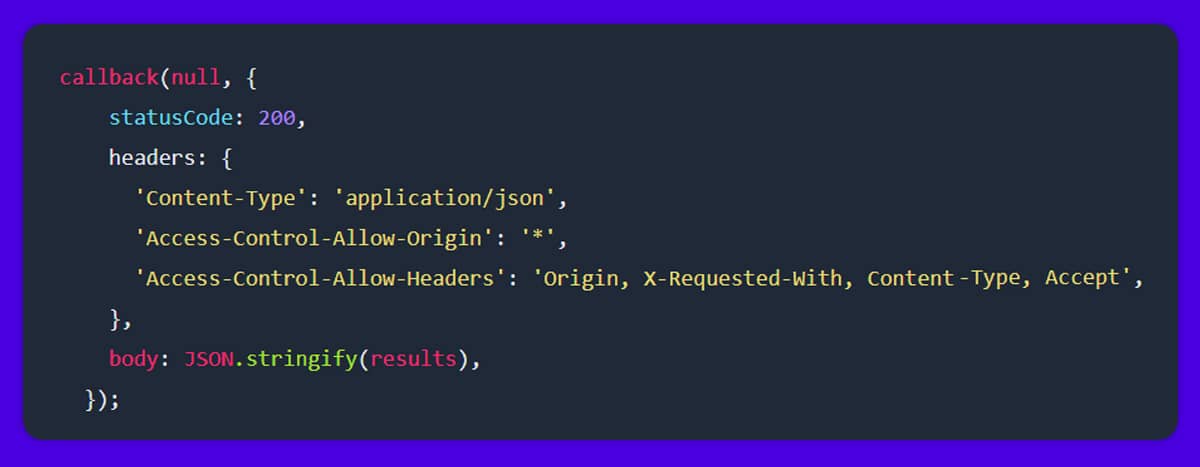

We inform the client that the request was successful (statusCode: 200), set the content type to JSON, and provide CORS headers so that the API may be used from anywhere. The stringified results make up the body of the response. The back-end search API is now complete!
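The response described above might look roughly like the following sketch. The `buildResponse` helper is hypothetical, and the `statusCode` / `headers` / `body` shape is assumed to be the one a serverless function on the hosting platform returns; the wildcard `Access-Control-Allow-Origin` header is one common way to allow use "from anywhere."

```javascript
// Hypothetical helper: turns a results object into an HTTP-style response.
function buildResponse(results) {
  return {
    statusCode: 200, // the request was successful
    headers: {
      'Content-Type': 'application/json',
      'Access-Control-Allow-Origin': '*', // allow requests from any origin
    },
    body: JSON.stringify(results), // the body must be a string
  };
}

const sampleResponse = buildResponse({ count: 0, items: [] });
console.log(sampleResponse.statusCode, sampleResponse.body);
```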

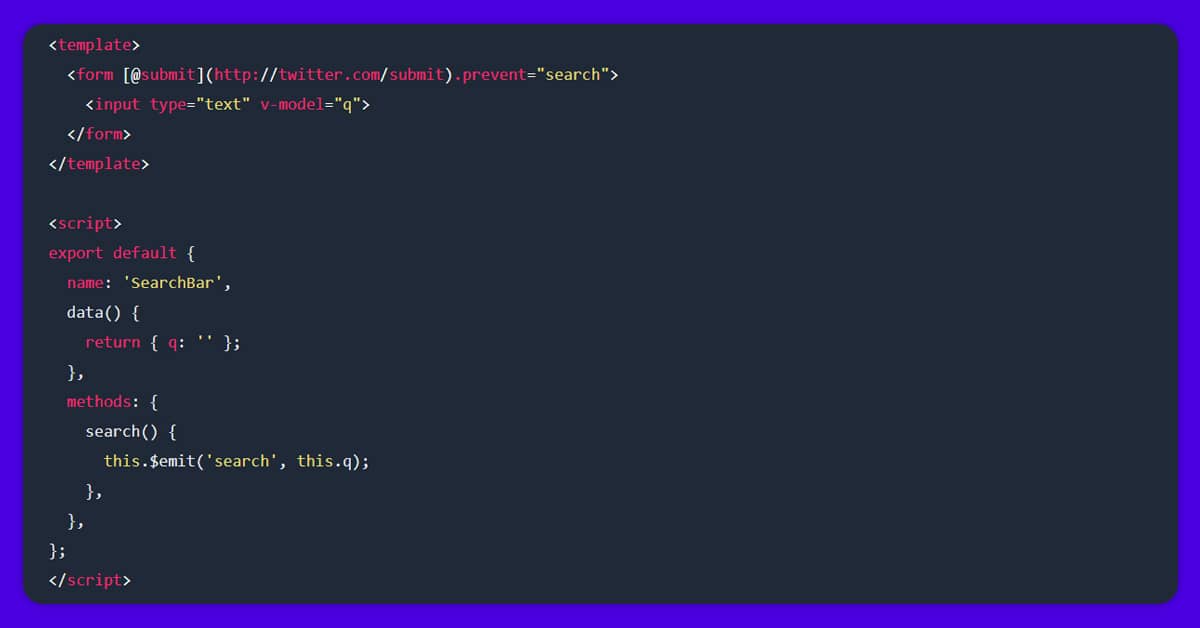

The front end uses Vue single-file components (SFCs). You can build a bespoke setup; however, the Vue CLI is the simplest way to get started with Vue SFCs.

The app is divided into five sections:

Axios is used in the main app to query the API and put the results…

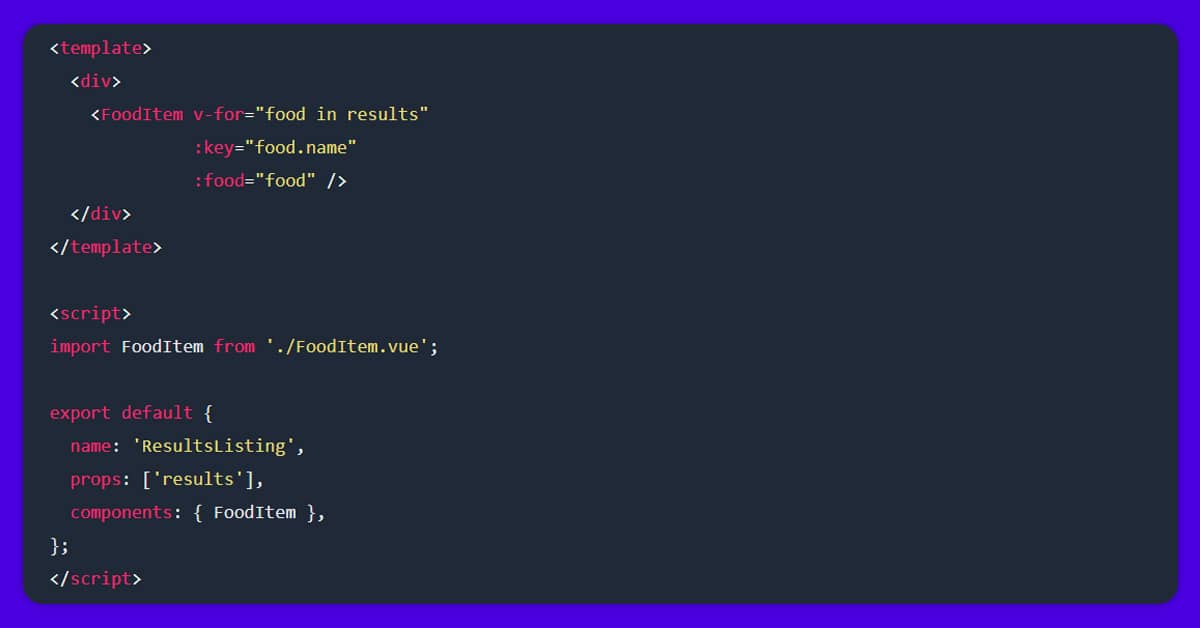

The results listing component loops over the results, producing a food item card for each one.

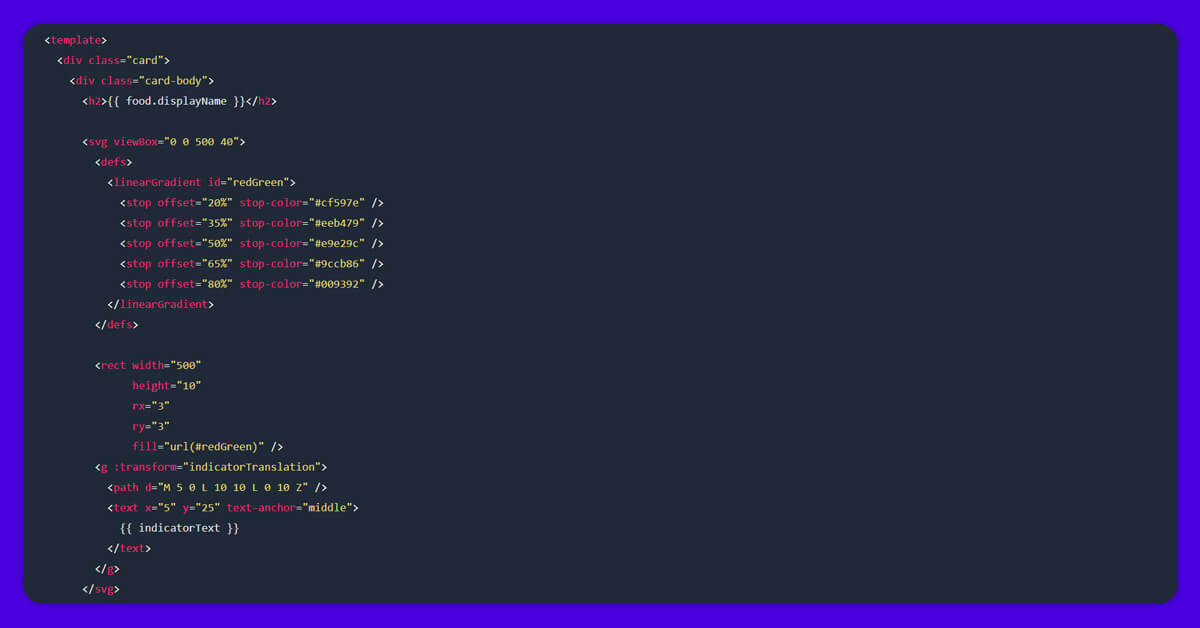

The data is shown on the food item cards, which include an SVG-based red-to-green food safety chart.

```vue
<template>
  <div class="card">
    <div class="card-body">
      <h2>{{ food.displayName }}</h2>
      <svg viewBox="0 0 500 40">
        <defs>
          <linearGradient id="redGreen">
            <stop offset="20%" stop-color="#cf597e" />
            <stop offset="35%" stop-color="#eeb479" />
            <stop offset="50%" stop-color="#e9e29c" />
            <stop offset="65%" stop-color="#9ccb86" />
            <stop offset="80%" stop-color="#009392" />
          </linearGradient>
        </defs>
        <rect width="500"
              height="10"
              rx="3"
              ry="3"
              fill="url(#redGreen)" />
        <g :transform="indicatorTranslation">
          <path d="M 5 0 L 10 10 L 0 10 Z" />
          <text x="5" y="25" text-anchor="middle">
            {{ indicatorText }}
          </text>
        </g>
      </svg>
      <h3>Explanation</h3>
      <p>{{ food.explanation }}</p>
      <h3>Suggestions</h3>
      <p>{{ food.suggestions }}</p>
    </div>
  </div>
</template>

<script>
export default {
  name: 'FoodItem',
  props: ['food'],
  computed: {
    indicatorTranslation() {
      const x = (this.food.level - 1) * 100 + 50;
      return `translate(${x} 10)`;
    },
    indicatorText() {
      const levels = ['Avoid', 'Caution', 'Okay', 'Good', 'Great'];
      return levels[this.food.level - 1];
    },
  },
};
</script>
```

The chart is arguably the most difficult component to grasp. It consists of three parts: the gradient rectangle, the indicator triangle, and the indicator text.

The rectangle is filled with a linear gradient, which is defined in the SVG’s `<defs>`.

The indicator triangle and its text are positioned together: I grouped them in a `<g>` and translated the group using a formula.

The y-axis translation is simple because it is always the same. I set it to 10 so that the indicator falls just below the gradient rectangle.

However, the x-axis translation is determined by the food’s “level” (“Avoid,” “Caution,” and so forth).

Each food has a level from 1 to 5 in the database, where 1 is the worst and 5 is the best. The indicator for the worst foods (1) sits toward the left (low x value), while the indicator for the best foods (5) sits toward the right (high x value).

The width of the chart is divided into “blocks.” Our SVG chart is 500 pixels wide and has 5 levels. 500 divided by 5 is 100, so each block is 100 pixels wide.

Using this information, the calculation multiplies the level (1–5) by 100.

If we used that number as the indicator’s x translation, the indicator would sit on the block’s very left edge. To correct this, we add 50, which places the indicator in the center of the block (100 / 2 = 50).

We’re nearly done, but the x-axis of our SVG chart starts at 0 and the levels begin at 1. This means that everything is offset by one “block” (100 pixels). To fix this, subtract 1 from the level before doing the rest of the calculation.
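The steps above can be checked with a small standalone function. This is a sketch that generalizes the component's `(level - 1) * 100 + 50` formula; the `indicatorX` name and its `chartWidth` and `levelCount` parameters are introduced here for illustration and are not part of the component.

```javascript
// Hypothetical helper mirroring the component's x translation.
function indicatorX(level, chartWidth = 500, levelCount = 5) {
  const blockWidth = chartWidth / levelCount; // 500 / 5 = 100
  // Subtract 1 because levels start at 1 but the x-axis starts at 0,
  // then add half a block to center the indicator within its block.
  return (level - 1) * blockWidth + blockWidth / 2;
}

// Print the transform string for each level.
for (let level = 1; level <= 5; level += 1) {
  console.log(level, `translate(${indicatorX(level)} 10)`);
}
// Level 1 gives x = 50, level 3 gives x = 250, level 5 gives x = 450.
```

With the defaults, each level lands in the middle of its own 100-pixel block, from 50 on the far left to 450 on the far right.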

The end product is a lovely card with a lovely graph.
